Neural Cellular Automata (NCA) - Interactive Demo

Interactive Demo

Before diving into the technical details, try out our interactive Neural Cellular Automaton demo below. Click and drag on the canvas to erase cells and watch as the neural network regenerates new patterns:

Click and drag to erase cells. The neural cellular automaton will regenerate patterns.

Abstract

Neural Cellular Automata (NCA) offer a compelling framework for image generation through local, self-organizing update rules. However, traditional NCA models are typically limited to low-resolution tasks due to the restricted receptive field and challenges with training stability over iterative updates. In this work, we explore strategies to enable NCA to synthesize higher-resolution images. We first investigate extending the receptive field using larger, fixed Sobel filters and introduce additional edge detectors at 45 and 135 degrees to improve edge sensitivity. Our experiments demonstrate that these modifications stabilize training and improve image clarity, though some structural elements may still be missed.

To further enhance NCA's capacity, we explore replacing Sobel filters with trainable convolutional layers. We test two methods to address the gradient instability caused by iterative updates: truncated backpropagation and the use of a scale factor to regulate convolutional outputs. Truncated backpropagation improves stability but suffers from slow convergence, while introducing a trainable scale factor significantly accelerates training. Despite these advancements, the scale factor method does not significantly outperform traditional Sobel-based NCA in final image quality.

Our findings highlight the potential of combining classical image processing techniques with trainable neural components to scale NCA to higher resolutions. The integration of convolutional neural networks opens new directions for future research, potentially enabling more complex and precise pattern generation through self-organizing systems.

Introduction

One of the most fascinating phenomena in the macroscopic world is how microscopic entities, completely unaware of the whole, can collectively create structures that are both beautiful and governed by emergent rules. In classical economics, this is reflected in how individuals, each pursuing their own self-interest with no knowledge of the broader system, inadvertently build efficient markets that allocate resources effectively. Similarly, the one-electron universe hypothesis suggests that all electrons in the universe could, in principle, be manifestations of a single electron traveling back and forth through time.

In computer science, Conway's Game of Life offers a glimpse into this wonder. It amazes many beginner programmers with how simple, local update rules can lead to unexpectedly complex patterns. Neural Cellular Automata (NCA) is a powerful extension of this idea. In NCA, a neural network acts like a creator, not by directly designing the final image, but by defining microscopic pixel-level rules. Each pixel knows nothing beyond the state of its immediate neighbors, yet through the passage of time and iterative updates, chaotic randomness evolves into the precise pattern the neural network intended to create. In other words, NCA trains a set of rules such that, according to these rules, a chaotic and disordered image can eventually self-organize into the desired pattern and stably maintain it.

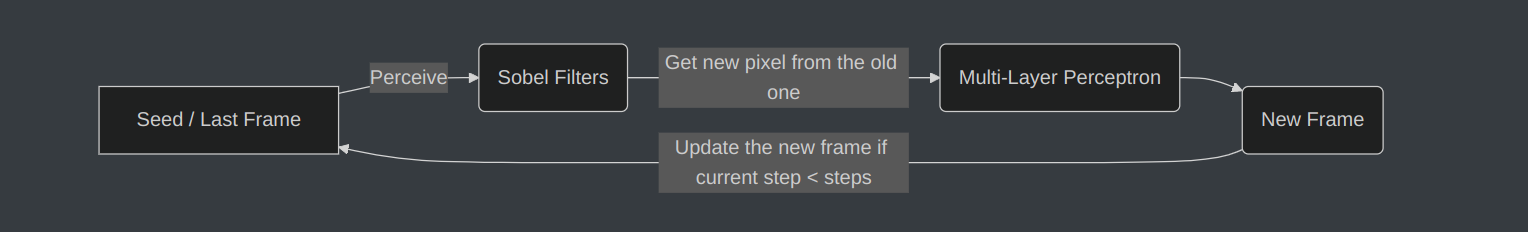

Traditional NCA Framework

In traditional NCA implementations, the system typically starts from a single active pixel positioned at the center of the canvas. The image channels are expanded from the standard three (RGB) to sixteen, with the fourth channel commonly serving as an alpha channel. Pixels with an alpha value less than 0.1 are considered invisible and effectively disappear from the image. Each pixel senses its local surroundings using Sobel filters to extract edge information in both the x and y directions. This local perception provides edge information that is fed into a small multi-layer perceptron (MLP), which updates the pixel's state accordingly. This process is iterated for 64 to 96 steps, allowing the pattern to gradually emerge. After the final iteration, the synthesized image is compared with a target image to compute the loss. The model is then trained through backpropagation to refine the update rules.

However, traditional NCA has primarily been applied to the generation of low-resolution images, such as emojis or texture patterns. This limitation largely stems from the restricted expressiveness of local rules; relying solely on information from neighboring pixels makes it difficult to capture global structures. Additionally, because the image is produced over many recurrent iterations (typically 64 to 96 steps), and the same neural network is applied at each step, the system is prone to issues like gradient explosion or vanishing gradients. These problems can make the model challenging to train and often hinder its scalability to higher resolutions.

Therefore, if we aim to increase the resolution, two key challenges need to be addressed:

-

We need to expand each pixel's receptive field so that it can make decisions based on information from slightly more distant neighboring pixels, not just its immediate surroundings.

-

We need to increase the complexity of the neural network while ensuring it remains trainable. Traditional techniques such as gradient clipping and residual connections have already been employed in early NCA models, but overcoming the current limitations will likely require more powerful methods.

Expanding Receptive Field by Adjusting Sobel Filter

In traditional NCA, the next state of each cell is determined based on the eight immediate neighboring cells using a 3x3 Sobel filter. Importantly, NCA typically employs the classic, non-trainable Sobel filter rather than a trainable CNN structure. The primary reason for this is that, due to the multi-step recurrent nature of NCA, introducing trainable convolutional layers can easily lead to training instability, making the model difficult or even impossible to converge.

However, if we aim to generate high-resolution images with NCA, it becomes essential to expand the receptive field. High-resolution images often contain regions that appear locally similar but are globally distinct. If the learned update rules fail to differentiate between these regions based on broader context, the model will struggle to generate the correct global structure. Expanding the receptive field while maintaining stable training remains a key challenge for advancing NCA toward high-resolution image synthesis.

For example, if we use the original 3x3 Sobel filter without modification to train NCA for image generation, the training process tends to be highly unstable. In some cases, after 700 epochs, the loss function completely diverges to NaN. In other cases, after 10,000 epochs, the NCA might barely manage to generate a roughly similar image, but the result often remains extremely coarse and lacking in fine detail. The figure below illustrates the outcome of using a classic NCA to generate a 128x128 image. On the far left is the target image we aimed to generate. The four images on the right show the results produced by an NCA model after 10,000 training epochs, when the loss curve had already plateaued and was no longer decreasing, following multiple attempts to achieve convergence. Even in this fortunate case where the training managed to converge, the generated images still exhibit significant differences in clarity and detail compared to the original target.

Naturally, if we wish to avoid the gradient explosion or vanishing gradient issues caused by applying trainable CNN layers repeatedly in the NCA loop, one possible solution is to use larger, fixed classical image operators—such as employing a 5x5 Sobel filter. This approach can potentially increase each pixel's receptive field without introducing additional trainable parameters that would destabilize the training process.

For example, the 5x5 Sobel filters in the x and y directions can be constructed using the following matrices (when using these filters, please ensure that they are properly normalized):

In our experiments, by replacing the 3x3 Sobel filters with the 5x5 versions, we observed through extensive testing that the training process became stable. Even after 10,000 epochs, when the loss curve in traditional methods had already plateaued and was no longer decreasing, continued training with the larger Sobel filters consistently improved the quality of the generated images. After 192,000 epochs, the results demonstrated noticeable enhancements compared to earlier stages of training.

Although the 5x5 Sobel filters can generate clearer images, we observed that in some cases (the far left one), certain structures—such as diamond-shaped blocks located in the lower regions of the target image—were occasionally missing from the generated results. This may be because the Sobel filter provides only two feature extraction operators, which may not supply sufficient information as the resolution increases.

Furthermore, we can extend this idea by introducing additional Sobel-like operators that detect edges at 45-degree and 135-degree orientations. Incorporating these additional directional filters can further enhance the network's ability to capture diverse edge information, which may contribute to faster convergence and improved stability during training.

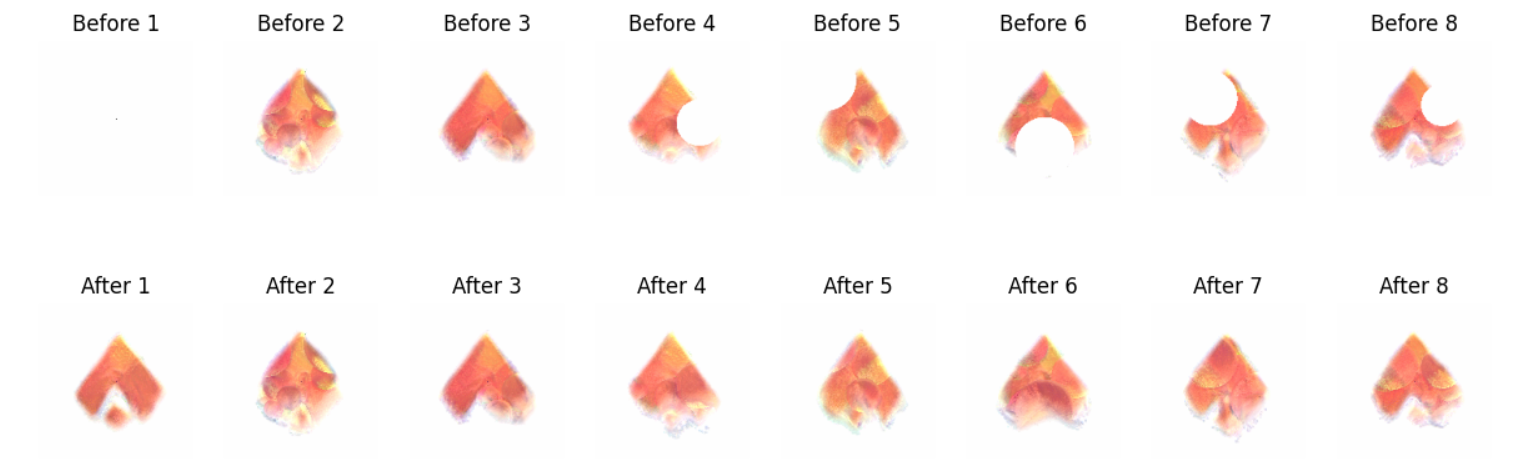

In our experiments, after 8,000 epochs, this 4-operator model was already capable of generating reasonably good images. The full 11000-epoch progression of the generated images is shown below. The first row represents the input, and the second row shows the final stable output images.

**In the future, we can explore enhancing the information extraction capability of NCA by incorporating more traditional digital image processing operators beyond the Sobel filter. **

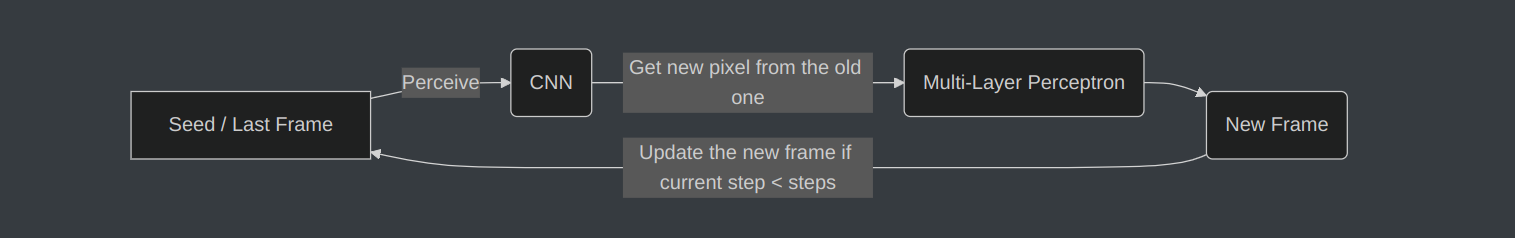

Future Exploration: Replacing Sobel Filters with Trainable CNNs

One of the milestones in computer vision is the ability of convolutional neural networks (CNNs) to learn and generate diverse image processing operators through training, moving beyond the constraints of classical operators like the Sobel filter. However, in NCA, since the final image is formed through many recurrent updates using the same convolutional layer, this setup can easily lead to gradient explosion or vanishing gradients, making the network difficult to train.

In our experiments, simply replacing the Sobel filters with trainable convolutional layers often caused the model to diverge. Common failure modes included the loss rapidly becoming NaN or the model converging to trivial solutions, such as generating uniformly colored images.

Two different strategies for implementing trainable convolutional layers within the NCA framework were tested. Using these methods, it was possible to train NCA models with learnable parameters. However, so far, these approaches have not produced significantly better results compared to the method of expanding the Sobel filters. Nonetheless, the introduction of trainable convolutions opens new directions and presents substantial potential for further exploration. By experimenting with different CNN architectures, it may be possible to achieve improved performance and reconstruct even higher-resolution images in the future.

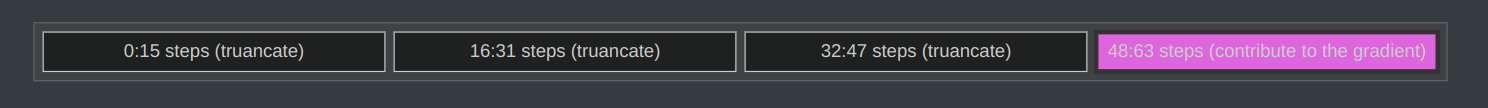

Truncated Backpropagation

The first alternative approach we explored involves applying gradient truncation during backpropagation. Specifically, we compute gradients using only the final few update steps, rather than propagating gradients through the entire sequence of updates. This strategy limits the depth of backpropagation and helps stabilize the training process. For example, in the following setup, the traditional Sobel filters were replaced with convolutional layers, offering greater flexibility to adjust structures, kernel sizes, and the number of operators.

To prevent gradient vanishing or explosion, we truncated the gradient at the 48th update step and computed the final gradient using only the last 16 update steps. This truncated gradient was then used to update the model parameters.

To prevent gradient vanishing or explosion, we truncated the gradient at the 48th update step and computed the final gradient using only the last 16 update steps. This truncated gradient was then used to update the model parameters.

The gradient computation formula is as follows:

The gradient computation formula is as follows:

Here, is the total number of update steps, is the number of final steps used for backpropagation, represents the state at step , and denotes the trainable parameters in the convolutional and linear layers.

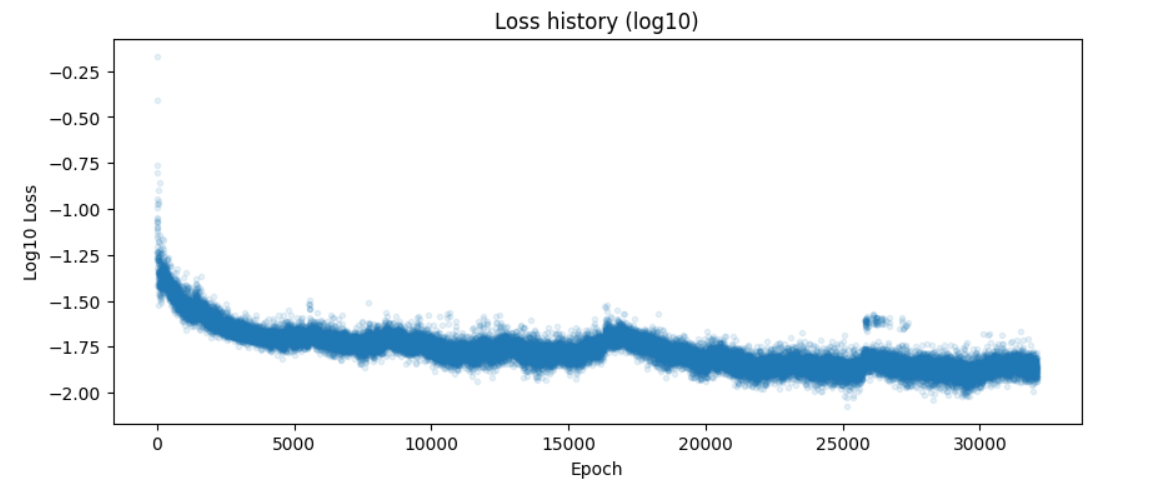

Although this method enabled training CNN layers within the NCA, it unfortunately resulted in a very slow training process. While we observed a decreasing loss function curve, the quality of the generated images remained unsatisfactory even after 32,000 epoches. It is likely that significantly more training epoches would be required to achieve the desired outcomes.

Stabilize Training using Scale Factor

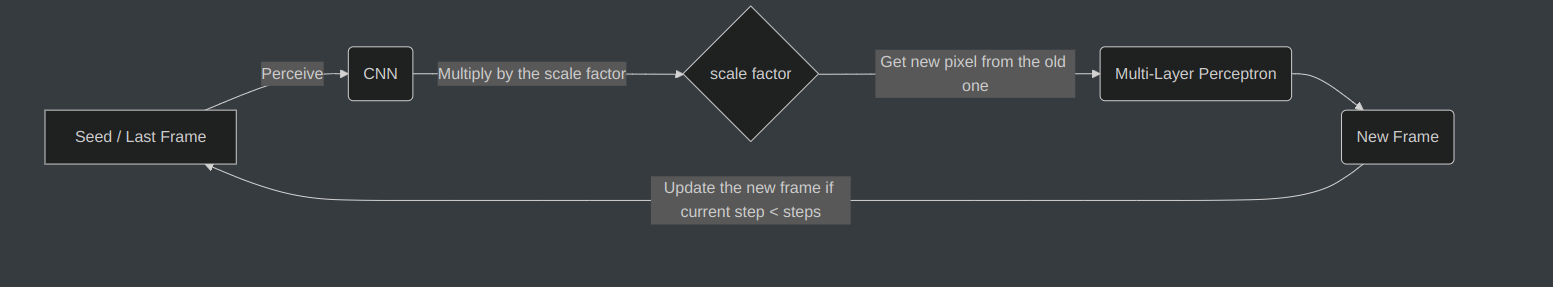

Another simple yet effective method is to introduce a scale factor to regulate the output of the CNN. The scale factor serves as a trainable parameter that modulates the magnitude of gradient features derived from the input data. These gradients, computed using trainable convolution kernels initialized as Sobel filters, capture spatial changes in the input grid. By applying the scale factor, the model adjusts the intensity of these gradient features before integrating them with the original input, ensuring their contribution aligns with the learning objectives.

The scale factor plays a critical role in stabilizing training. Without it, gradient features could produce excessively large or small values, leading to unstable updates or slow convergence in the NCA's iterative process. As a trainable parameter, the scale factor is optimized during training, allowing the model to dynamically balance the influence of gradient-based information, which is particularly important in tasks requiring precise control over dynamic patterns.

Additionally, the scale factor enhances the model's adaptability to diverse tasks. For example, in pattern generation or dynamic system simulation, different scenarios may require varying levels of sensitivity to spatial gradients. The trainable nature of the scale factor enables the NCA to fine-tune this sensitivity, ensuring robust performance across different initial conditions or grid configurations. The inclusion of the scale factor also addresses challenges inherent in NCA training, such as the tension between local feature detection and global behavior emergence. By scaling the gradients, the model can prioritize relevant spatial features without overwhelming the update process, facilitating the emergence of complex, coherent patterns over multiple time steps.

In our experiments, this method was able to generate reasonably good target images after 6,400 epochs. However, the results were not significantly better than those achieved using the NCA network with four Sobel operators. Nevertheless, the training speed was faster.

Conclusion

Through our exploration, we found that using larger traditional digital image processing operators can effectively enhance NCA's capability to generate high-resolution images. More importantly, incorporating trainable CNNs holds even greater potential. This approach allows the integration of CNN advancements into the NCA framework, opening up vast opportunities for future exploration and significant performance improvements.